In my application, I need to use a custom second-level cache.

In Hibernate 5, the complete SQL string is used as the cache key, and everything works as expected.

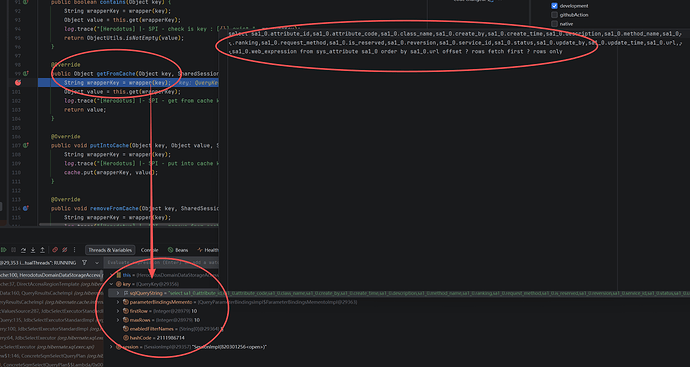

Hibernate 6 uses QueryKey instead. Moreover, at present only the return value of the generateHashCode() method in QueryKey can be used as the key. This is not a problem for non-paginated queries.

However, because generateHashCode() does not include the values of firstRow and maxRows, paginated queries that differ only in the requested page produce the same cache key.

The visible symptom is that pagination stops working, because every page resolves to the same cache entry.
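The effect can be reproduced outside Hibernate with a small sketch. Everything here is hypothetical and for illustration only: `PaginationCollisionDemo`, `keyFor`, `query`, and the in-memory `cache` map stand in for a custom cache that is keyed solely by an int hash, and `keyFor` only imitates the shape of a hash that leaves pagination out.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical stand-in for a custom cache keyed only by an int hash.
class PaginationCollisionDemo {

    // Stand-in cache: hash value -> cached page of results.
    static final Map<Integer, List<String>> cache = new HashMap<>();

    // Imitates a hash that ignores pagination (assumption: only the SQL contributes).
    static int keyFor(String sql, Integer firstRow, Integer maxRows) {
        int result = 13;
        result = 37 * result + sql.hashCode();
        // firstRow and maxRows are deliberately left out here
        return result;
    }

    // Returns the cached page if the key exists, otherwise "executes" the query.
    static List<String> query(String sql, int firstRow, int maxRows, List<String> allRows) {
        int key = keyFor(sql, firstRow, maxRows);
        return cache.computeIfAbsent(key, k -> {
            int end = Math.min(firstRow + maxRows, allRows.size());
            return allRows.subList(firstRow, end);
        });
    }

    public static void main(String[] args) {
        List<String> rows = List.of("a", "b", "c", "d");
        String sql = "select name from item order by id"; // hypothetical query
        System.out.println(query(sql, 0, 2, rows)); // prints [a, b]
        System.out.println(query(sql, 2, 2, rows)); // also prints [a, b]: page 2 hits page 1's entry
    }
}
```

The second call asks for rows 2 and 3 but receives the first page, because both requests map to the same key.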

    private int generateHashCode() {
        int result = 13;
        result = 37 * result + sqlQueryString.hashCode();
        // Don't include the firstRow and maxRows in the hash as these values are rarely useful for query caching
        // result = 37 * result + ( firstRow==null ? 0 : firstRow );
        // result = 37 * result + ( maxRows==null ? 0 : maxRows );
        result = 37 * result + parameterBindingsMemento.hashCode();
        result = 37 * result + Arrays.hashCode( enabledFilterNames );
        return result;
    }

My temporary workaround is to uncomment the firstRow and maxRows lines and override the class with the patched code. With that change, paginated queries run correctly.

    private int generateHashCode() {
        int result = 13;
        result = 37 * result + sqlQueryString.hashCode();
        // Patched: firstRow and maxRows are now included so that each page gets its own hash
        result = 37 * result + ( firstRow==null ? 0 : firstRow );
        result = 37 * result + ( maxRows==null ? 0 : maxRows );
        result = 37 * result + ( tenantIdentifier==null ? 0 : tenantIdentifier.hashCode() );
        result = 37 * result + parameterBindingsMemento.hashCode();
        result = 37 * result + Arrays.hashCode( enabledFilterNames );
        return result;
    }
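To compare the two variants in isolation, here is a minimal standalone sketch. It is not Hibernate code: `QueryKeyHashDemo`, `hashWithoutPaging`, and `hashWithPaging` are hypothetical names, the `bindingsHash` parameter stands in for `parameterBindingsMemento.hashCode()`, and the tenant identifier is omitted for brevity.

```java
import java.util.Arrays;

// Hypothetical re-creation of the two hash variants, for comparison only.
class QueryKeyHashDemo {

    // Variant that leaves pagination out of the hash.
    static int hashWithoutPaging(String sql, Integer firstRow, Integer maxRows,
                                 int bindingsHash, String[] filterNames) {
        int result = 13;
        result = 37 * result + sql.hashCode();
        result = 37 * result + bindingsHash;
        result = 37 * result + Arrays.hashCode(filterNames);
        return result;
    }

    // Variant that mixes firstRow and maxRows into the hash.
    static int hashWithPaging(String sql, Integer firstRow, Integer maxRows,
                              int bindingsHash, String[] filterNames) {
        int result = 13;
        result = 37 * result + sql.hashCode();
        result = 37 * result + (firstRow == null ? 0 : firstRow);
        result = 37 * result + (maxRows == null ? 0 : maxRows);
        result = 37 * result + bindingsHash;
        result = 37 * result + Arrays.hashCode(filterNames);
        return result;
    }

    public static void main(String[] args) {
        String sql = "select name from item order by id"; // hypothetical query
        String[] filters = new String[0];

        // Page 1 (offset 0) versus page 2 (offset 10), same page size.
        boolean collidesBefore = hashWithoutPaging(sql, 0, 10, 7, filters)
                == hashWithoutPaging(sql, 10, 10, 7, filters);
        boolean collidesAfter = hashWithPaging(sql, 0, 10, 7, filters)
                == hashWithPaging(sql, 10, 10, 7, filters);

        System.out.println(collidesBefore); // prints true
        System.out.println(collidesAfter);  // prints false
    }
}
```

Note that an int hash can still collide for unrelated queries, so a cache keyed only by this value remains exposed to occasional false hits even with pagination included.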